Parsing CSVs really fast in Rust

I have recently begun learning Rust, and have very quickly grown to love various aspects of the language.

The tooling is very nice, along with the type system. It is also great for writing very fast programs,

particularly using SIMD. The easiest way to use SIMD in Rust is with the portable_simd crate,

available in nightly versions. However, it has limits.

Basics

To parse a CSV, you need to

- Find the delimiters (commas, in most cases)

- Find the quotes

- Find the newlines

- Under no circumstances find delimiters or newlines inside of quotes

line.split(","). Most non-SIMD approaches

will use state machine logic here. Keep track of whether we are inside a quote, iterate over the bytes, only count newlines and commas

that are encountered when not inside quotes. This can be relatively performant, but incurs a lot of overhead because of branch misprediction.

Avoiding Branch Prediction

If you really hate branch misprediction, and I mean really hate it, then you can avoid the byte-by-byte state machine approach entirely. First, you need to be able to read from your buffer (file, or stdin, or whatever you choose) in chunks that are aligned with the number of lanes whichever SIMD implementation you're working with. For the following examples, I will assume 8 bytes in a single chunk. Let's look at a simple example CSV line:

a,"b,",d

Now let's do some comparisons to get 2 bitmasks

01001010 -> delimiter locations

00100100 -> quote locations

Now, we know where the quotes are, and where the delimiters are, but we want to exclude the comma inside of the quotes.

What we want is the prefix-xor of the quote locations to fill in the bits that are between two quotes.

00100100 -> 00111100. Once we have this value, it is a simple matter of negating and &ing this value with the delimiter locations.

01001010 -> delimiter locations

11000011 -> inverted quote mask

01000010 -> result of &

Now you may be thinking "won't calculating the prefix-xor require iterating over all the bits?" and the answer is yes, but not quite, because there is a very helpful instruction to speed this process up. On

aarch64 it is called vmull_p64, on

x86_64 it is called pclmulqdq, both of which perform a polynomial multiply over GF(2). I won't get into the specifics of how this works, but

it results in a single instruction which performs the prefix-xor operation described above.

One aspect that I been ignoring is the fact that each of these chunks will not necessarily align perfectly with the number of quotes in a line.

Continuing with 8 byte chunks, consider this case:

a,b,"c,d,e,f",g,h -> line

a,b,"c,d -> chunk 1

,e,f",g, -> chunk 2

00001000 -> quote mask for chunk 1

00001000 -> quote mask for chunk 2

00001111 -> prefix xor for chunk 1

00001111 -> prefix xor for chunk 2

Both of these chunks will have the same exact mask, resulting in the delimiters in the second chunk being parsed incorrectly.

To work around this, simply toggle the first bit of the quote mask for a chunk if the previous chunk ended with an uneven number of quotes.

00001000 -> quote mask for chunk 1

10001000 -> quote mask for chunk 2

00001111 -> prefix xor for chunk 1

11111000 -> prefix xor for chunk 2

SIMD Comparison

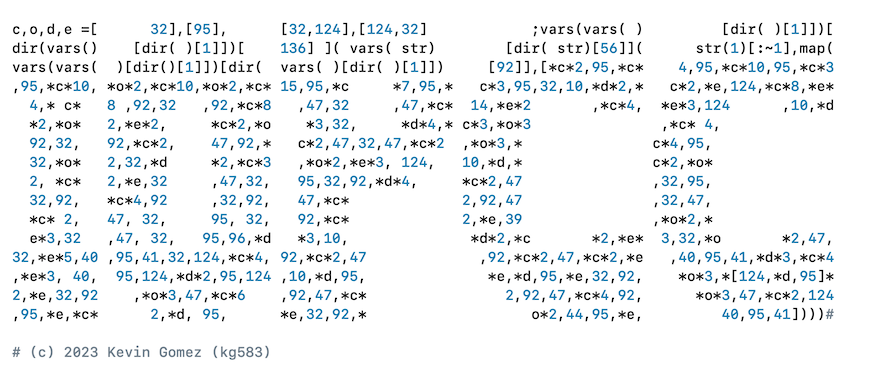

At this point, we have a method of very quickly calculating the quote mask, which is the main thing we need. Our algorithm reads in chunks of 8 bytes, compares them to a splatted vector of the delimiters, quotes, and newlines, and then masks out escaped chars, resulting in a bitmask which tells us exactly where our delimiters are. One aspect I have been eliding is how the SIMD comparison actually works. The main bottleneck of this approach is of course the comparisons, with at the very least 3 comparisons (4 if you want to handle CRLF newlines). The naive approach, usingportable_simd

would look something like this in Rust:

let line = Simd::from_slice(line[..CHUNK_SIZE];

let delimiter_locations = line.simd_eq(Simd::splat(',' as u8)).to_bitmask();

...

And this works quite nicely, with a caveat. On aarch64 platforms there is no movemask instruction,

meaning it is expensive to convert from our SIMD lanes into an integer bitmask. In my benchmarking, this comparison and then conversion was

taking up the vast majority of execution time, so any improvement would yield great gains.

First major improvement: switching to std::arch::* and directly writing the SIMD code using intrinsics.

This of course has a major tradeoff with portable_simd--it is not portable! But for my goals, writing intrinsics helped improve performance a great deal.

This allowed me to write code that generated the bitmask from the SIMD lanes directly, bypassing any intermediaries. For parsing, I only needed to compare and directly

write that comparison vector out to a bitmask. Using intrinsics I was able to use some tricks (like using interleaving instructions).

I have a more detailed write-up with comparisons with other Rust SIMD CSV parsers on my GitHub, along with source code.